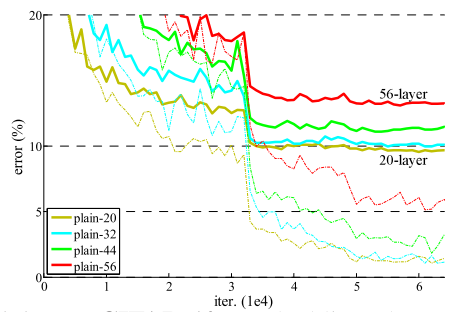

DEEP RESIDUAL LEARNING FOR IMAGE RECOGNITION SERIES

Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun
Microsoft Research

Abstract

Deeper neural networks are more difficult to train. We present a residual learning framework to ease the training of networks that are substantially deeper than those used previously. We explicitly reformulate the layers as learning residual functions with reference to the layer inputs, instead of learning unreferenced functions. We provide comprehensive empirical evidence showing that these residual networks are easier to optimize, and can gain accuracy from considerably increased depth. On the ImageNet dataset we evaluate residual nets with a depth of up to 152 layers, 8× deeper than VGG nets but still having lower complexity. An ensemble of these residual nets achieves 3.57% error on the ImageNet test set. This result won the 1st place on the ILSVRC 2015 classification task. We also present analysis on CIFAR-10 with 100 and 1000 layers.

The depth of representations is of central importance for many visual recognition tasks. Solely due to our extremely deep representations, we obtain a 28% relative improvement on the COCO object detection dataset. Deep residual nets are foundations of our submissions to ILSVRC & COCO 2015 competitions, where we also won the 1st places on the tasks of ImageNet detection, ImageNet localization, COCO detection, and COCO segmentation.

Deep convolutional neural networks have led to a series of breakthroughs for image classification. Deep networks naturally integrate low/mid/high-level features and classifiers in an end-to-end multi-layer fashion, and the "levels" of features can be enriched by the number of stacked layers (depth). Recent evidence reveals that network depth is of crucial importance, and the leading results on the challenging ImageNet dataset all exploit "very deep" models, with a depth of sixteen to thirty. Many other non-trivial visual recognition tasks have also greatly benefited from very deep models.

Driven by the significance of depth, a question arises: Is learning better networks as easy as stacking more layers? An obstacle to answering this question was the notorious problem of vanishing/exploding gradients, which hamper convergence from the beginning.

Figure (axes: training error (%) versus iter. (1e4)): Training error (left) and test error (right) on CIFAR-10 with 20-layer and 56-layer "plain" networks. The deeper network has higher training error, and thus test error. Similar phenomena on ImageNet are presented in another figure.
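The abstract's idea of "learning residual functions with reference to the layer inputs" can be illustrated with a small sketch. It assumes the commonly cited form of a residual block, output = F(x) + x, where F is a small stack of layers. The helper names (`linear`, `relu`, `plain_block`, `residual_block`), the two-layer shape of F, and the use of plain Python lists instead of convolutions are all illustrative assumptions, not the authors' implementation.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]


def relu(v: Vector) -> Vector:
    """Element-wise rectified linear unit."""
    return [max(0.0, x) for x in v]


def linear(weights: Matrix, v: Vector) -> Vector:
    """Matrix-vector product; stands in for a learned layer (no bias)."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]


def plain_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """Unreferenced mapping: the layers must produce the whole output."""
    return linear(w2, relu(linear(w1, x)))


def residual_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """Residual mapping: the layers only learn F(x); the input is added back."""
    f = plain_block(x, w1, w2)                 # F(x)
    return [fi + xi for fi, xi in zip(f, x)]   # F(x) + x


if __name__ == "__main__":
    x = [1.0, -2.0, 3.0]
    zeros = [[0.0] * 3 for _ in range(3)]
    # With all-zero weights, the plain block collapses to zeros,
    # while the residual block passes its input through unchanged.
    print(plain_block(x, zeros, zeros))
    print(residual_block(x, zeros, zeros))
```

In this toy setting, driving the weights to zero turns the residual block into an identity mapping, whereas the plain block would have to learn the identity explicitly. That is one informal way to read the claim that residual networks are easier to optimize; the sketch does not demonstrate the empirical results reported above.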
